This article provides step-by-step instructions for reading files from SFTP sources and landing them to BigQuery. The approach for reading from SFTP would be in two stages:

Using an action plugin to read the data from SFTP and landing it on HDFS .

Use File source to read from HDFS transform the data and land it in BigQuery.

Instructions

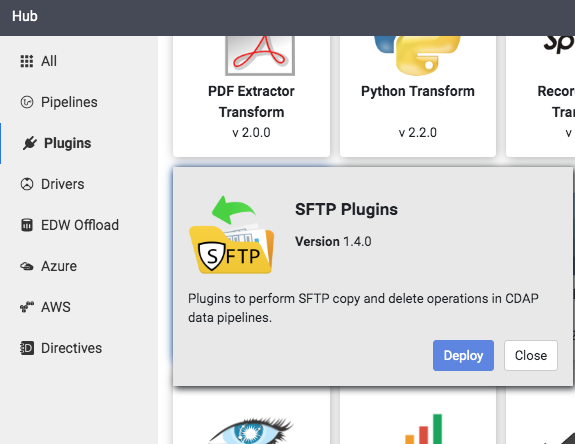

Deploy SFTP plugins from the Hub.

Configure the pipeline with SFTPCopy action to read from SFTP source.

The source directory specifies the directory in SFTP server. The directory can take a wildcard pattern to read the files. The entire file(s) will be copied to the destination specified in the destination directory configuration. The destination directory will be created in Dataproc cluster.

By default, all the files that are copied will be held in a variable calledsftp.copied.file.nameswhich is configurable in the SFTPCopy action plugin configuration.Use a File source to read the contents of the files copied from the SFTPCopy Action.

Configure the Path configuration in File source to be the same as destination directory configuration in SFTPCopy action.Add any additional wrangling steps or any other transformations required to process the data.

Use a BigQuery Sink to write the data to BigQuery Table by configuring the BQ Dataset and Table name.

Optionally, use an SFTPDelete Action if the files read from SFTPCopy should be deleted. The files to be deleted will be fetched by default from sftp.copied.file.names variable.