Introduction

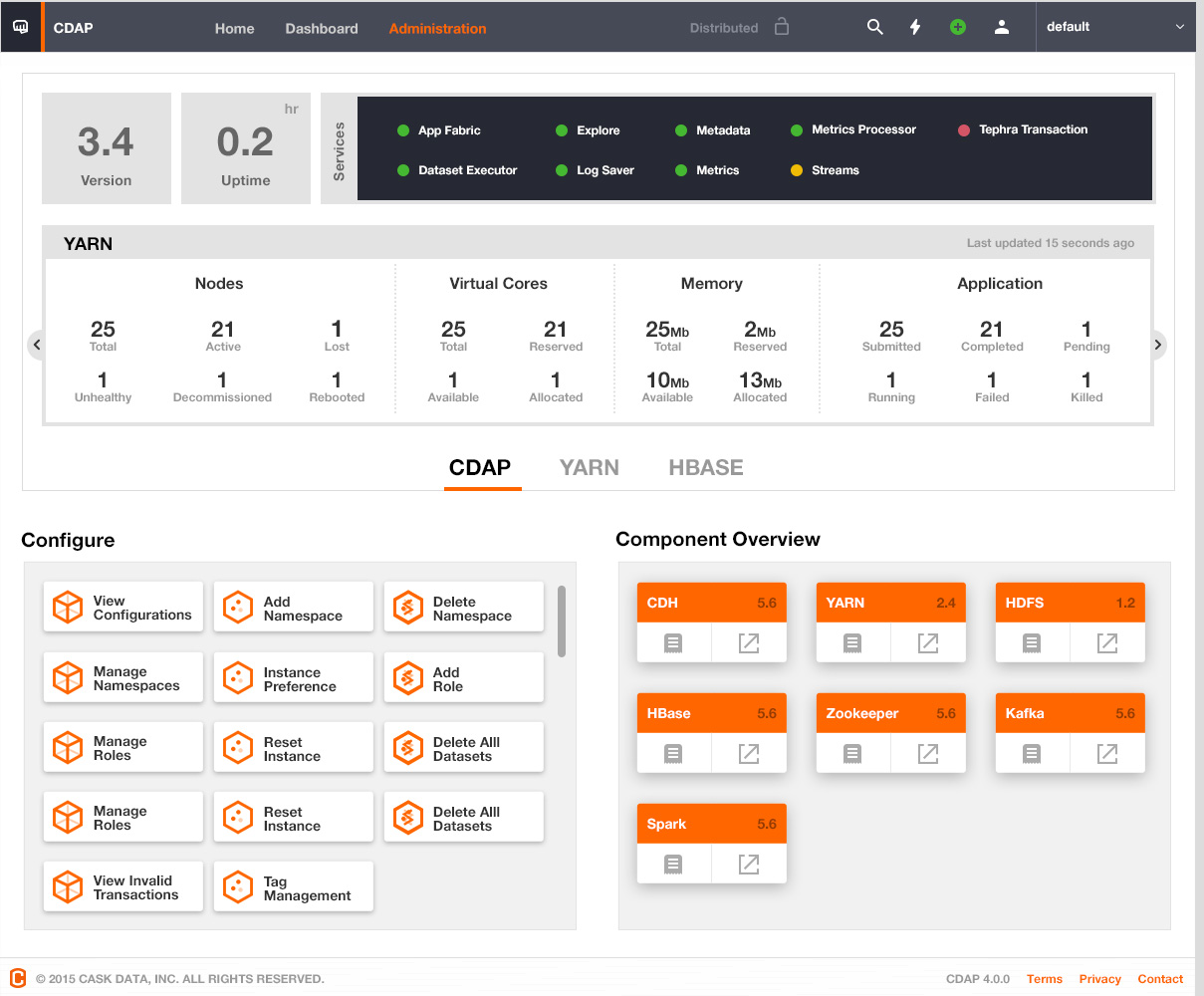

The CDAP 4.0 UI is designed to provide operational insights about both CDAP services and other service providers such as YARN, HBase and HDFS. The CDAP platform will need to expose additional APIs to surface this information.

Goals

The operational APIs should surface the information needed by the Management Screen.

These designs translate into the following requirements:

- CDAP Uptime

- P1: Should indicate the time (number of hours, days?) for which the CDAP Master process has been running.

- P2: In an HA environment, it would be nice to indicate the time of the last master failover.

- CDAP System Services:

- P1: Should indicate the current number of instances.

- P1: Should have a way to scale services.

- P1: Should show service logs

- P2: Node name where container started

- P2: Container name

- P2: master.services YARN application name

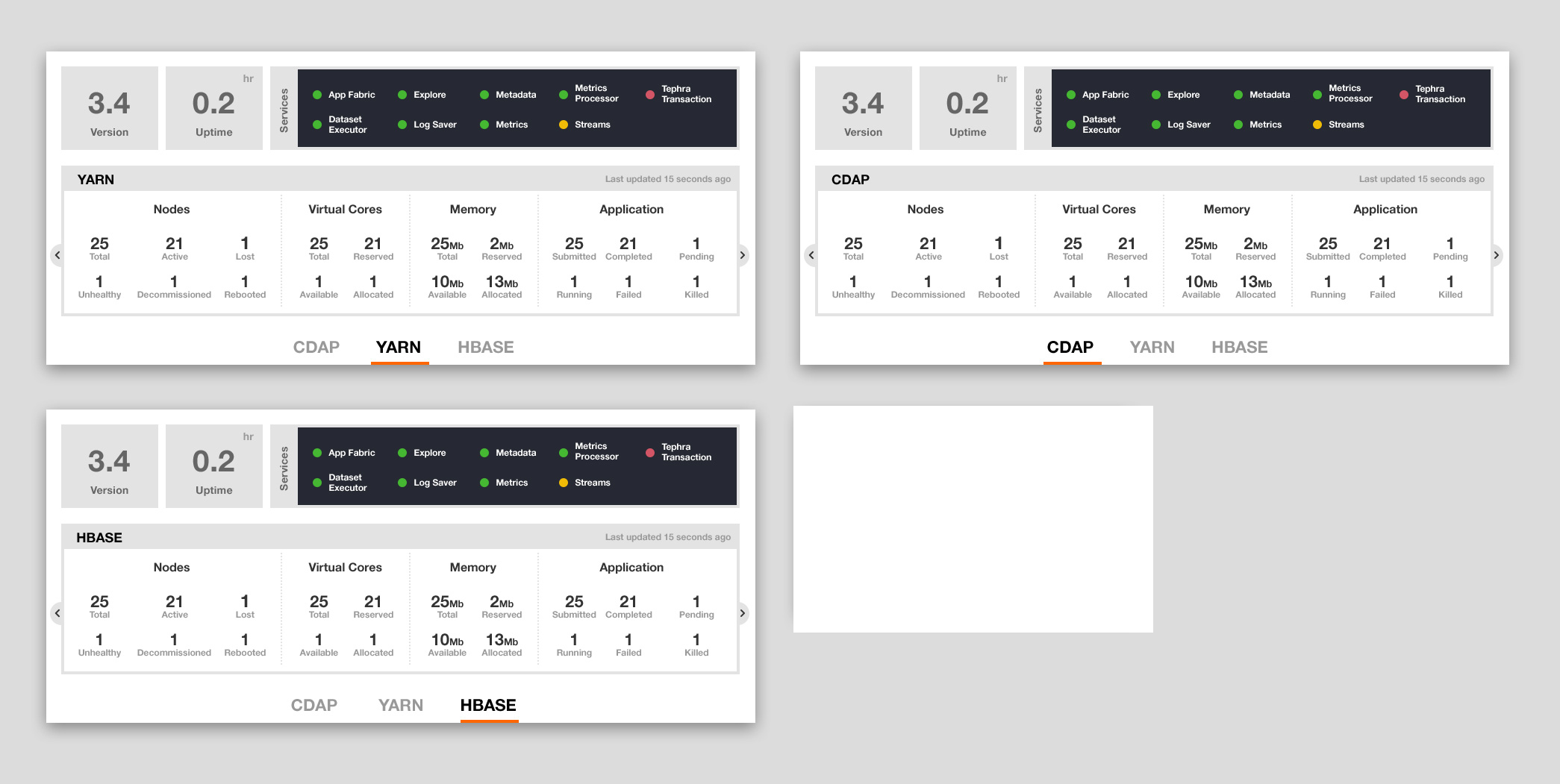

- Middle Drawer:

- CDAP:

- P1: # of masters, routers, kafka-servers, auth-servers

- P1: Router requests - # 200s, 404s, 500s

- P1: # namespaces, artifacts, apps, programs, datasets, streams, views

- P1: Transaction snapshot summary (invalid, in-progress, committing, committed)

- P1: Logs/Metrics service lags

- P2: Last GC pause time

- HDFS:

- P1: Space metrics: total, free, used

- P1: Nodes: total, healthy, decommissioned, decommissionInProgress

- P1: Blocks: missing, corrupt, under-replicated

- YARN:

- P1: Nodes: total, new, running, unhealthy, decommissioned, lost, rebooted

- P1: Apps: total, submitted, accepted, running, failed, killed, new, new_saving

- P1: Memory: total, used, free

- P1: Virtual Cores: total, used, free

- P1: Queues: total, stopped, running, max_capacity, current_capacity

- HBase

- P1: Nodes: total_regionservers, live_regionservers, dead_regionservers, masters

- P1: No. of namespaces, tables

- P2: Last major compaction (time + info)

- Zookeeper: Most of these are from the output of echo mntr | nc localhost 2181

- P1: Num of alive connections

- P1: Num of znodes

- P1: Num of watches

- P1: Num of ephemeral nodes

- P1: Data size

- P1: Open file descriptor count

- P1: Max file descriptor count

- Kafka

- JMX Metrics that Kafka exposes: https://kafka.apache.org/documentation#monitoring

- P1: # of topics

- P1: Message in rate

- P1: Request rate

- P1: # of under replicated partitions

- P1: Partition counts

- Sentry

- P1: # of roles

- P1: # of privileges

- P1: memory: total, used, available

- P1: requests per second

- any more?

- KMS

- TBD: Having a hard time hitting the JMX endpoint for KMS

- CDAP:

- Component Overview

- P1: YARN, HDFS, HBase, Zookeeper, Kafka, Hive

- P1: For each component: version, url, logs_url

- P2: Sentry, KMS

- P2: Distribution info

- P2: Plus button - to store custom components and version, url, logs_url for each.

User Stories

- As a CDAP admin, I would like a single place to perform health checks and monitoring for CDAP system services as well as service providers that CDAP depends upon.

- As a CDAP admin, I would like to have insights into the health of all CDAP system services including master, log saver, explore container, metrics processor, metrics, streams, transaction server and dataset executor

- As a CDAP admin, I would like to know information about my CDAP setup including the version of CDAP

- As a CDAP admin, I would like to know the uptime of CDAP including optionally the time since the last failover in an HA scenario

- As a CDAP admin, I would like to know the versions and (optionally) links to the web UI and logs if available of the underlying infrastructure components.

- As a CDAP admin, I would like to have operational insights including stats such as request rate, node status, available compute as well as storage capacity for the underlying infrastructure components that CDAP relies upon. These insights should help me understand the health of these components as well as help in root cause analysis in case CDAP fails or performs poorly.

Design

Data Sources

Versions

- CDAP - co.cask.cdap.common.utils.ProjectInfo

- HBase - co.cask.cdap.data2.util.hbase.HBaseVersion

- YARN - org.apache.hadoop.yarn.util.YarnVersionInfo

- HDFS - org.apache.hadoop.util.VersionInfo

- Zookeeper - No client API available. Will have to build a utility around echo stat | nc localhost 2181

- Hive - org.apache.hive.common.util.HiveVersionInfo
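The Zookeeper utility mentioned above could be sketched as follows. This is a minimal illustration, not CDAP code: the class name ZkFourLetterWord and its methods are hypothetical, and the parser assumes that the stat reply contains a line of the form "Zookeeper version: <version>, built on <date>".

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper; not part of CDAP or of the Zookeeper client library. */
final class ZkFourLetterWord {

  private ZkFourLetterWord() { }

  /** Sends a four-letter command such as "stat" and returns the raw reply. */
  static String send(String host, int port, String command, int timeoutMs) throws IOException {
    try (Socket socket = new Socket()) {
      socket.connect(new InetSocketAddress(host, port), timeoutMs);
      socket.setSoTimeout(timeoutMs);
      OutputStream out = socket.getOutputStream();
      out.write(command.getBytes(StandardCharsets.US_ASCII));
      out.flush();
      // The server writes its reply and then closes the connection,
      // so reading until end of stream collects the whole reply.
      InputStream in = socket.getInputStream();
      ByteArrayOutputStream reply = new ByteArrayOutputStream();
      byte[] buffer = new byte[4096];
      int read;
      while ((read = in.read(buffer)) != -1) {
        reply.write(buffer, 0, read);
      }
      return new String(reply.toByteArray(), StandardCharsets.UTF_8);
    }
  }

  /** Returns the version from the "Zookeeper version:" line, or null if no such line exists. */
  static String parseVersion(String statOutput) {
    String prefix = "Zookeeper version:";
    for (String line : statOutput.split("\\r?\\n")) {
      if (line.startsWith(prefix)) {
        String rest = line.substring(prefix.length()).trim();
        int comma = rest.indexOf(',');
        return comma >= 0 ? rest.substring(0, comma).trim() : rest;
      }
    }
    return null;
  }
}
```

A caller would combine the two, for example ZkFourLetterWord.parseVersion(ZkFourLetterWord.send("localhost", 2181, "stat", 5000)), and treat a null result or an IOException as "version unknown".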

URL

- CDAP - $(dashboard.bind.address) + $(dashboard.bind.port)

- YARN - $(yarn.resourcemanager.webapp.address)

- HDFS - $(dfs.namenode.http-address)

- HBase - hbaseAdmin.getClusterStatus().getMaster().toString()

HDFS

DistributedFileSystem - For HDFS stats

YARN

YarnClient - for YARN stats and info

HBase

HBaseAdmin - for HBase stats and info

Kafka

JMX

Reference: https://github.com/linkedin/kafka-monitor
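As a rough sketch of how Kafka stats could be read over JMX, the helper below fetches a single MBean attribute. The class JmxAttributeReader is hypothetical. The two MBean names are taken from the Kafka monitoring documentation and should be verified against the broker version in use, and the remote URL assumes that the broker exposes JMX over RMI on a known port.

```java
import java.io.IOException;
import javax.management.JMException;
import javax.management.MBeanServerConnection;
import javax.management.ObjectName;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;

/** Hypothetical helper for reading one JMX attribute; not part of CDAP or Kafka. */
final class JmxAttributeReader {

  // MBean names from the Kafka monitoring documentation (assumed to match the broker version).
  // UnderReplicatedPartitions is a gauge (attribute "Value");
  // MessagesInPerSec is a meter (for example attribute "OneMinuteRate").
  static final String UNDER_REPLICATED_PARTITIONS =
      "kafka.server:type=ReplicaManager,name=UnderReplicatedPartitions";
  static final String MESSAGES_IN_PER_SEC =
      "kafka.server:type=BrokerTopicMetrics,name=MessagesInPerSec";

  private JmxAttributeReader() { }

  /** Reads one attribute of one MBean over an existing connection. */
  static Object read(MBeanServerConnection connection, String mbean, String attribute)
      throws IOException, JMException {
    return connection.getAttribute(new ObjectName(mbean), attribute);
  }

  /** Opens a JMX-over-RMI connection to host:port, reads one attribute, and closes the connection. */
  static Object readRemote(String host, int port, String mbean, String attribute)
      throws IOException, JMException {
    JMXServiceURL url =
        new JMXServiceURL("service:jmx:rmi:///jndi/rmi://" + host + ":" + port + "/jmxrmi");
    try (JMXConnector connector = JMXConnectorFactory.connect(url)) {
      return read(connector.getMBeanServerConnection(), mbean, attribute);
    }
  }
}
```

Opening a new connection for every attribute is wasteful; a real fetcher would probably open one connection per request and read all attributes over it.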

Zookeeper

Option 1: Four letter commands - mntr. Drawbacks: mntr was introduced in 3.4.0 - users may be running older versions of Zookeeper

Option 2: Zookeeper also exposes JMX - https://zookeeper.apache.org/doc/trunk/zookeeperJMX.html
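For Option 1, the mntr reply is a list of tab-separated key/value lines, so parsing it is straightforward. The sketch below is illustrative only (MntrParser is a hypothetical name). The Zookeeper stats listed in the requirements would map to keys such as zk_num_alive_connections, zk_znode_count, zk_watch_count, zk_ephemerals_count, zk_approximate_data_size, zk_open_file_descriptor_count and zk_max_file_descriptor_count.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical parser for the reply of the Zookeeper "mntr" command. */
final class MntrParser {

  private MntrParser() { }

  /** Splits each "key<TAB>value" line into a map entry; lines without a tab are skipped. */
  static Map<String, String> parse(String mntrOutput) {
    Map<String, String> stats = new LinkedHashMap<>();
    for (String line : mntrOutput.split("\\r?\\n")) {
      int tab = line.indexOf('\t');
      if (tab <= 0) {
        continue;
      }
      stats.put(line.substring(0, tab).trim(), line.substring(tab + 1).trim());
    }
    return stats;
  }

  /** Returns the numeric value for a key, or defaultValue if the key is absent or not a number. */
  static long getLong(Map<String, String> stats, String key, long defaultValue) {
    String value = stats.get(key);
    if (value == null) {
      return defaultValue;
    }
    try {
      return Long.parseLong(value);
    } catch (NumberFormatException e) {
      return defaultValue;
    }
  }
}
```

Returning a default for missing keys keeps the fetcher tolerant of version differences, since not every key is reported by every server (for example, the file descriptor counts are platform dependent).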

HiveServer2

TBD

Sentry

JMX

The following is available by enabling the sentry web service (ref: http://www.cloudera.com/documentation/enterprise/latest/topics/sg_sentry_metrics.html) and querying for metrics (API: http://[sentry-service-host]:51000/metrics?pretty=true).

KMS

KMS also exposes JMX via the endpoint http://host:16000/kms/jmx.

Implementation

The service provider stats fetchers will be implemented as extensions. CDAP will install each extension as a jar file in the master/ext/operations directory, and will scan each jar file in this directory for implementations of the OperationalStatsFetcher interface defined below. There will be one implementation of this interface for every service provider, and a single jar file may contain multiple implementations. These classes will be loaded in a separate classloader whose parent is the CDAP system classloader. At this point, however, there will not be classloader isolation.

CDAP will provide a core extension, installed at master/ext/operations/core/cdap-operations-extensions-core, which will contain stats for some standard service providers. Additional services can be configured by implementing OperationalStatsFetcher for the service and placing the jar file under master/ext/operations/.

```java
import java.io.IOException;
import java.net.MalformedURLException;
import java.net.URL;
import java.util.Map;

/**
 * An interface to allow fetching operational statistics from services such as HDFS, YARN, HBase, etc.
 */
public interface OperationalStatsFetcher {

  /** Names the service provider that an implementation fetches stats for. */
  @interface ServiceName {
    String value();
  }

  String getVersion();

  URL getWebURL() throws MalformedURLException;

  URL getLogsURL();

  NodeStats getNodeStats() throws IOException;

  StorageStats getStorageStats() throws IOException;

  MemoryStats getMemoryStats();

  ComputeStats getComputeStats();

  AppStats getAppStats();

  QueueStats getQueueStats();

  EntityStats getEntityStats();

  ServiceStats getServicesStats();

  class NodeStats {
    int total;
    int healthy;
    int decommissioned;
  }

  class StorageStats {
    long totalMB;
    long usedMB;
    long availableMB;
    long missingBlocks;
    long corruptBlocks;
    long underreplicatedBlocks;
  }

  class MemoryStats {
    long totalMB;
    long usedMB;
    long availableMB;
  }

  class ComputeStats {
    int totalVCores;
    int usedVCores;
    int availableVCores;
  }

  class AppStats {
    int totalApps;
    int runningApps;
    int failedApps;
    int killedApps;
  }

  class QueueStats {
    int totalQueues;
    int usedQueues;
    int availableQueues;
  }

  class EntityStats {
    int namespaces;
    int artifacts;
    int apps;
    int programs;
    int datasets;
    int streams;
    int tables;
  }

  /** Maps each service name to a count for that service. */
  class ServiceStats {
    Map<String, Integer> services;
  }
}
```
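One way the extension loading described above might work is sketched below. Several points are assumptions rather than part of this design: discovery uses java.util.ServiceLoader (which requires each jar to carry a META-INF/services entry) instead of scanning class files, the directory is scanned non-recursively, and the annotation is given RUNTIME retention so that the service name can be read by reflection. Fetcher and Name are simplified stand-ins for OperationalStatsFetcher and ServiceName so that the sketch compiles on its own.

```java
import java.io.IOException;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.net.URL;
import java.net.URLClassLoader;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.ServiceLoader;

/** Hypothetical loader for stats fetcher extensions; all names here are illustrative. */
final class StatsFetcherLoader {

  /** Stand-in for OperationalStatsFetcher. */
  interface Fetcher {
    String getVersion();
  }

  /** Stand-in for ServiceName; RUNTIME retention is an assumption needed for reflection. */
  @Retention(RetentionPolicy.RUNTIME)
  @Target(ElementType.TYPE)
  @interface Name {
    String value();
  }

  /** Example implementation, used only to illustrate the name lookup. */
  @Name("hdfs")
  static final class SampleHdfsFetcher implements Fetcher {
    @Override
    public String getVersion() {
      return "unknown";
    }
  }

  private StatsFetcherLoader() { }

  /** Lists the jar files directly under the extensions directory. */
  static List<URL> listJars(Path extensionsDir) throws IOException {
    List<URL> jars = new ArrayList<>();
    if (!Files.isDirectory(extensionsDir)) {
      return jars;
    }
    try (DirectoryStream<Path> stream = Files.newDirectoryStream(extensionsDir, "*.jar")) {
      for (Path jar : stream) {
        jars.add(jar.toUri().toURL());
      }
    }
    return jars;
  }

  /** Returns the annotated service name, falling back to the simple class name. */
  static String nameOf(Class<?> fetcherClass) {
    Name name = fetcherClass.getAnnotation(Name.class);
    return name != null ? name.value() : fetcherClass.getSimpleName();
  }

  /** Loads all fetchers found in the jars, keyed by service name. */
  static Map<String, Fetcher> load(Path extensionsDir, ClassLoader parent) throws IOException {
    List<URL> jars = listJars(extensionsDir);
    // The classloader is intentionally not closed: loaded classes stay in use for the process lifetime.
    URLClassLoader loader = new URLClassLoader(jars.toArray(new URL[0]), parent);
    Map<String, Fetcher> fetchers = new HashMap<>();
    for (Fetcher fetcher : ServiceLoader.load(Fetcher.class, loader)) {
      fetchers.put(nameOf(fetcher.getClass()), fetcher);
    }
    return fetchers;
  }
}
```

Because the parent classloader is passed in, the caller can supply the CDAP system classloader, which matches the "no isolation" behavior described above. Note that if the annotation keeps the default CLASS retention, the name would have to be read by a bytecode scanner rather than by reflection.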

TODO: CDAP Master Uptime?
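One possible answer to the uptime question, assuming the value is computed inside the CDAP Master JVM, is to read the JVM uptime from the standard RuntimeMXBean. The class MasterUptime below is a hypothetical sketch. It reports how long the current process has been running, which in an HA environment is not necessarily the time since the last failover.

```java
import java.lang.management.ManagementFactory;
import java.util.concurrent.TimeUnit;

/** Hypothetical helper; reports the uptime of the JVM it runs in. */
final class MasterUptime {

  private MasterUptime() { }

  /** Milliseconds since the current JVM started. */
  static long uptimeMillis() {
    return ManagementFactory.getRuntimeMXBean().getUptime();
  }

  /** Formats a duration as days, hours and minutes, for example "1d 1h 1m". */
  static String format(long millis) {
    long days = TimeUnit.MILLISECONDS.toDays(millis);
    long hours = TimeUnit.MILLISECONDS.toHours(millis) % 24;
    long minutes = TimeUnit.MILLISECONDS.toMinutes(millis) % 60;
    return days + "d " + hours + "h " + minutes + "m";
  }
}
```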

Caching

There will not be any caching in these APIs. Since the UI only needs to poll every 15 seconds or even less frequently, it is acceptable to hit the service providers on every request.

API changes

New REST APIs

The following REST APIs will be exposed from app fabric.

| Path | Method | Description | Response Code | Response |
|---|---|---|---|---|
| /v3/system/serviceproviders | GET | Lists all the available service providers and (optionally) minimal info about each (version, url and logs_url) | 200 - On success; 500 - Any internal errors | |
| /v3/system/serviceproviders/{service-provider-name}/stats | GET | Returns stats for the specified service provider | 200 OK - Stats for the specified service provider were successfully fetched; 503 Service Unavailable - Could not contact the service provider for status; 404 Not Found - Service provider not found (not in the list returned by the list service providers API); 500 - Any other internal errors | TODO: Add responses for Kafka, Zookeeper, Sentry, KMS |
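A client of the stats API could interpret the response codes in the table roughly as follows. This is a sketch under assumptions: ServiceProviderStatsClient is a hypothetical class, the base URL is supplied by the caller, and service provider names are assumed to be URL-safe. Response bodies are not read because their format is not yet defined here.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URI;

/** Hypothetical client sketch for the service provider stats API. */
final class ServiceProviderStatsClient {

  private ServiceProviderStatsClient() { }

  /** Builds the stats path for a provider; the name is assumed to need no URL encoding. */
  static String statsPath(String provider) {
    return "/v3/system/serviceproviders/" + provider + "/stats";
  }

  /** Maps the response codes listed in the API table to a short description. */
  static String describe(int responseCode) {
    switch (responseCode) {
      case 200:
        return "stats fetched";
      case 404:
        return "service provider not found";
      case 503:
        return "service provider could not be contacted";
      case 500:
        return "internal error";
      default:
        return "unexpected response code " + responseCode;
    }
  }

  /** Issues the GET request and returns the HTTP response code. */
  static int fetchStatus(String baseUrl, String provider) throws IOException {
    HttpURLConnection connection =
        (HttpURLConnection) URI.create(baseUrl + statsPath(provider)).toURL().openConnection();
    connection.setRequestMethod("GET");
    connection.setConnectTimeout(5000);
    connection.setReadTimeout(5000);
    try {
      return connection.getResponseCode();
    } finally {
      connection.disconnect();
    }
  }
}
```

For example, describe(fetchStatus(baseUrl, "hdfs")) would distinguish an unknown provider (404) from a provider that is configured but unreachable (503), which is the distinction that test scenarios T3 and T4 exercise.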

CLI Impact or Changes

New CLI commands will have to be added to front the two new APIs.

List Service Providers

list service providers

Get Service Provider Stats

get stats for service provider <service-provider>

UI Impact or Changes

The Management screen on the CDAP 4.0 UI will have to be implemented using the APIs exposed by this design, in addition to the existing APIs for getting System Service Status and Logs.

Security Impact

Currently CDAP does not enforce authorization for the system services APIs. Users with ADMIN privileges on the CDAP instance should be able to execute these APIs successfully.

Impact on Infrastructure Outages

Test Scenarios

| Test ID | Test Description | Expected Results |
|---|---|---|
| T1 | Positive test for list API | Should return all the configured service providers |
| T2 | Positive test for stats of each service provider | Should return the appropriate details for each service provider |
| T3 | Stop a configured service provider and hit the API to get its stats | Should return 503 with a proper error message |
| T4 | Hit the API to get stats of a non-existent service provider | Should return 404 with a proper error message |

Releases

Release 4.0.0

- Ground work for collecting stats from infrastructure components.

- Focus on HDFS, YARN, HBase

Release 4.1.0

- More components such as Hive, Kafka, Zookeeper, Sentry, KMS (in that order).

Related Work

Future work

- TBD