...

Keep the Instrumentation setting on unless the environment is short on resources.

For streaming pipelines, you can also set the Batch interval, in seconds or minutes.

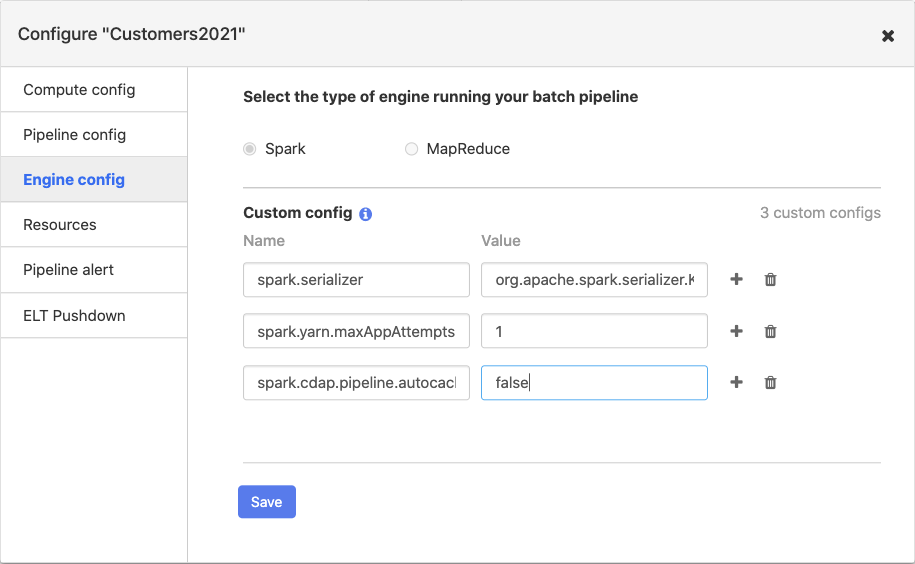

Engine config

For each pipeline, you can choose Spark or MapReduce as the execution engine. Spark is the default. You can also add custom configurations to pass additional settings to the engine. Custom configurations typically apply to Spark and less often to MapReduce.

Spark engine configs

Here are some examples of custom configurations that are commonly used for Spark:

- To improve pipeline performance, enter `spark.serializer` as the Name and `org.apache.spark.serializer.KryoSerializer` as the Value.
- To work around a bug in Spark versions prior to 2.4, enter `spark.maxRemoteBlockSizeFetchToMem` as the Name and `2147483135` as the Value.
- If you don’t want Spark to retry upon failure, enter `spark.yarn.maxAppAttempts` as the Name and `1` as the Value. If you want Spark to retry multiple times, set the value to the number of retries you want Spark to perform.
- To turn off auto-caching in Spark, enter `spark.cdap.pipeline.autocache.enable` as the Name and `false` as the Value. By default, pipelines cache intermediate data to prevent Spark from recomputing it. This requires a substantial amount of memory, so pipelines that process a large amount of data often need to turn this off.
- To disable pipeline task retries, enter `spark.task.maxFailures` as the Name and `1` as the Value.
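Taken together, the settings above are a small set of Name/Value pairs. The following Python sketch only collects them in a dictionary and prints them in the form you would type into the custom configuration fields. The names `spark_custom_configs` and `format_pairs` are illustrative and not part of CDAP. Pick only the entries that apply to your pipeline.

```python
# Illustrative only: the Spark custom configurations described above,
# collected as Name/Value pairs. The dictionary and function names are
# hypothetical and exist only for this sketch.
spark_custom_configs = {
    "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
    "spark.maxRemoteBlockSizeFetchToMem": "2147483135",
    "spark.yarn.maxAppAttempts": "1",
    "spark.cdap.pipeline.autocache.enable": "false",
    "spark.task.maxFailures": "1",
}


def format_pairs(configs):
    """Return one 'Name = Value' line per entry, in insertion order."""
    return [f"{name} = {value}" for name, value in configs.items()]


for line in format_pairs(spark_custom_configs):
    print(line)
```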

...

For common Spark settings, see Parallel Processing.

MapReduce engine configs (deprecated)

Here’s an example of a custom configuration used for MapReduce:

...

To run a database query when a pipeline run finishes, configure the following properties. You can validate the properties before you save the configuration.

| Property | Description |
|---|---|
| Run Condition | When to run the action. Must be completion, success, or failure. Defaults to success. If set to completion, the action will be performed regardless of whether the pipeline run succeeds or fails. If set to success, the action will only be performed if the pipeline run succeeds. If set to failure, the action will only be performed if the pipeline run fails. |
| Plugin name | Required. Name of the JDBC plugin to use. This is the value of the ‘name’ key defined in the JSON file for the JDBC plugin. |
| Plugin type | The type of JDBC plugin to use. This is the value of the ‘type’ key defined in the JSON file for the JDBC plugin. Defaults to ‘jdbc’. |
| JDBC connection string | Required. JDBC connection string including database name. |
| Query | Required. The database command to run. |
| Credentials - Username | User to use to connect to the specified database. Required for databases that need authentication. Optional for databases that do not require authentication. |
| Credentials - Password | Password to use to connect to the specified database. Required for databases that need authentication. Optional for databases that do not require authentication. |
| Advanced - Connection arguments | A list of arbitrary string tag/value pairs as connection arguments. This is a semicolon-separated list of key/value pairs, where each pair is separated by an equals sign ‘=’ and specifies the key and value for the argument. For example, ‘key1=value1;key2=value2’ specifies that the connection will be given arguments ‘key1’ mapped to ‘value1’ and ‘key2’ mapped to ‘value2’. |
| Advanced - Enable auto-commit | Whether to enable auto-commit for queries run by the source. Defaults to false. This setting should only matter if you are using a JDBC driver that does not support the commit call. |
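To make the Run Condition and Connection arguments semantics concrete, here is a minimal Python sketch. It is not CDAP code: the function names `should_run` and `parse_connection_arguments` are hypothetical, and trimming whitespace around keys and values is an assumption of this sketch rather than documented behavior.

```python
VALID_RUN_CONDITIONS = {"completion", "success", "failure"}


def should_run(run_condition, pipeline_succeeded):
    """Decide whether the action runs, following the Run Condition rules."""
    if run_condition not in VALID_RUN_CONDITIONS:
        raise ValueError(f"Unknown run condition: {run_condition!r}")
    if run_condition == "completion":
        return True  # runs whether the pipeline run succeeded or failed
    if run_condition == "success":
        return pipeline_succeeded
    return not pipeline_succeeded  # "failure"


def parse_connection_arguments(text):
    """Parse a 'key1=value1;key2=value2' string into a dictionary.

    Assumption: surrounding whitespace is ignored and empty segments
    (for example, a trailing semicolon) are skipped.
    """
    arguments = {}
    for pair in text.split(";"):
        pair = pair.strip()
        if not pair:
            continue
        key, separator, value = pair.partition("=")
        if not separator or not key.strip():
            raise ValueError(f"Malformed connection argument: {pair!r}")
        arguments[key.strip()] = value.strip()
    return arguments


print(parse_connection_arguments("key1=value1;key2=value2"))
print(should_run("success", pipeline_succeeded=False))
```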

Making an HTTP call

To call an HTTP endpoint when a pipeline run finishes, configure the following properties. You can validate the properties before you save the configuration.

| Property | Description |
|---|---|
| Run Condition | When to run the action. Must be completion, success, or failure. Defaults to completion. If set to completion, the action will be performed regardless of whether the pipeline run succeeds or fails. If set to success, the action will only be performed if the pipeline run succeeds. If set to failure, the action will only be performed if the pipeline run fails. |
| URL | Required. The URL to fetch data from. |
| HTTP Method | Required. The HTTP request method. Choose from: DELETE, GET, HEAD, OPTIONS, POST, PUT. Defaults to POST. |
| Request body | The HTTP request body. |
| Number of Retries | The number of times the request should be retried if the request fails. Defaults to 0. |
| Should Follow Redirects | Whether to automatically follow redirects. Defaults to true. |
| Request Headers | Request headers to set when performing the HTTP request. |
| Generic - Connection Timeout | Sets the connection timeout in milliseconds. Set to 0 for infinite. Default is 60000 (1 minute). |
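The following Python sketch approximates how these properties could combine into a single call. It is an illustration built on the standard `urllib` module, not the plugin's implementation. The helper names are hypothetical, and it assumes that a retry count of N means up to N + 1 attempts in total. The timeout here applies to blocking socket operations in general, which only approximates a connection timeout, and disabling redirects is not modeled (`urllib` follows them by default).

```python
import urllib.request

ALLOWED_METHODS = {"DELETE", "GET", "HEAD", "OPTIONS", "POST", "PUT"}


def build_request(url, method="POST", body=None, headers=None):
    """Build a request from the URL, HTTP Method, Request body, and headers."""
    if method not in ALLOWED_METHODS:
        raise ValueError(f"Unsupported HTTP method: {method!r}")
    data = body.encode("utf-8") if body is not None else None
    return urllib.request.Request(url, data=data, headers=headers or {}, method=method)


def urlopen_sender(request, timeout):
    """Send the request over the network and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


def send_with_retries(send, request, retries=0, timeout_ms=60000):
    """Call send(request, timeout_seconds), retrying on connection errors.

    Assumption: `retries` counts extra attempts after the first one.
    A timeout of 0 ms is mapped to None, which urllib treats as no timeout.
    """
    timeout = None if timeout_ms == 0 else timeout_ms / 1000
    last_error = None
    for _ in range(retries + 1):
        try:
            return send(request, timeout)
        except OSError as error:  # urllib.error.URLError is a subclass of OSError
            last_error = error
    raise last_error
```

To perform a real call, pass `urlopen_sender` as `send`, for example `send_with_retries(urlopen_sender, build_request(url), retries=2)` with a `url` of your own. Keeping the sender injectable also lets you exercise the retry logic without network access.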

Transformation pushdown

Starting in CDAP 6.5.0, you can enable Transformation pushdown to have BigQuery process joins. For more information, see Using Transformation pushdown.